Creating an Offline Version of a Page

A large client has a secure website where they assemble and create presentations, each consisting of a single Table of Contents page with many PDFs attached to it. This is a big client, a rich client, and they needed a way to guarantee they would always be able to access the site. So I got this request:

Can you make an offline version of the page that is always up to date and available for download, so we can download it and present from it in case the website is down or, more often, in case Internet access is unavailable?

Update: I used cookie-based authentication to secure this client's site, so only logged-in users can see anything at all. So how do the curl requests authenticate too, using the cookie of the user making the request? Just add the user's HTTP_COOKIE to the headers array used by curl, like so:

array(

...

"Cookie: {$_SERVER['HTTP_COOKIE']}"

)

That means the scraped version of the page is an exact duplicate of what the user is looking at. Very sweet!
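For anyone wiring this up from scratch, here's a minimal sketch of the same trick as a reusable helper, assuming PHP's curl extension is loaded. The function names build_forward_headers() and fetch_as_user() are hypothetical, not the ones used in scrapeit.php.

```php
<?php
// Forward the visitor's session cookie on every curl request, so the scraper
// sees the page exactly as the logged-in user does.
// build_forward_headers() and fetch_as_user() are hypothetical helper names.

// Return $extra plus a Cookie header copied from $server, if one was sent.
function build_forward_headers(array $server, array $extra = array())
{
    $headers = $extra;
    if (!empty($server['HTTP_COOKIE'])) {
        $headers[] = 'Cookie: ' . $server['HTTP_COOKIE'];
    }
    return $headers;
}

// Fetch $url as the current user; returns the body, or false on failure.
function fetch_as_user($url, array $server)
{
    $ch = curl_init($url);
    curl_setopt_array($ch, array(
        CURLOPT_RETURNTRANSFER => true,
        CURLOPT_FOLLOWLOCATION => true,
        CURLOPT_HTTPHEADER     => build_forward_headers($server),
    ));
    $body = curl_exec($ch);
    curl_close($ch);
    return $body;
}

// Usage inside scrapeit.php:
// $html = fetch_as_user($fetch_url, $_SERVER);
```

Passing the cookie through CURLOPT_HTTPHEADER keeps it alongside any other headers you send; setting CURLOPT_COOKIE to the raw cookie string would work too.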

I can GET anything

So, here's what I hacked together last night, which is being used today. It's essentially two files.

- A PHP file that uses curl to scrape all the URLs for the page (favicon, CSS, images, PDFs, etc.)

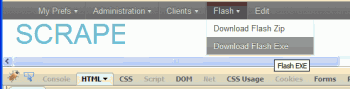

- A simple bash shell script, run as a CGI, that creates a zip file of all the URLs, plus a self-extracting .exe for those without a zip tool like WinZip

The PHP File

This is a simple script that takes two parameters:

- The URL to scrape

- The type of download to return (zip or exe)
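Since both values come straight from the query string, it's worth checking them before scraping anything. A minimal sketch, assuming you only ever want to scrape your own host: read_scrape_params() is a hypothetical helper, and falling back to exe mirrors the else branch near the end of scrapeit.php.

```php
<?php
// Validate the url and type parameters before doing any work.
// read_scrape_params() is a hypothetical helper; restricting to one host is
// an assumption, not something the original script does.

function read_scrape_params(array $get, $allowed_host)
{
    if (empty($get['url'])) {
        return null;
    }
    $url = filter_var($get['url'], FILTER_VALIDATE_URL);
    if ($url === false) {
        return null;
    }
    // Refuse to scrape anything but our own site.
    if (parse_url($url, PHP_URL_HOST) !== $allowed_host) {
        return null;
    }
    // Anything other than "zip" falls back to the self-extracting exe.
    $type = (isset($get['type']) && $get['type'] === 'zip') ? 'zip' : 'exe';
    return array('url' => $url, 'type' => $type);
}

// Usage:
// $params = read_scrape_params($_GET, 'www.askapache.com');
// if ($params === null) die();
```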

scrapeit.php

$ch_info,

'curl_errno' => curl_errno($ch),

'curl_error' => curl_error($ch)

), 1));

curl_close($ch);

return $g;

}

/**

* _mkdir() makes a directory with exactly $mode (the umask is cleared first)

*

* @return bool true on success

*/

function _mkdir($path, $mode = 0755)

{

$old = umask(0);

$res = @mkdir($path, $mode);

umask($old);

return $res;

}

/**

* rmkdir() recursively makes a directory tree

*

* @return bool true if the whole path exists afterwards

*/

function rmkdir($path, $mode = 0755)

{

return is_dir($path) || (rmkdir(dirname($path), $mode) && _mkdir($path, $mode));

}
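Side note: PHP's own mkdir() takes a third $recursive argument, which does the same job as rmkdir(). The sketch below (hypothetical local_path_for() and ensure_parent_dir()) maps a fetched URL to a file under the scratch directory and creates its parent folders. mkdir() still applies the process umask to $mode, which is why _mkdir() clears it first.

```php
<?php
// Map a fetched URL to a file under the scratch directory, then create the
// parent directories with mkdir()'s built-in $recursive flag.
// local_path_for() and ensure_parent_dir() are hypothetical helper names.

function local_path_for($url, $root)
{
    $path = (string) parse_url($url, PHP_URL_PATH);
    if ($path === '' || substr($path, -1) === '/') {
        // Directory-style URLs are saved as index.html, like the page itself.
        $path = rtrim($path, '/') . '/index.html';
    }
    // Drop empty, "." and ".." segments so a URL cannot climb out of $root.
    $parts = array();
    foreach (explode('/', $path) as $seg) {
        if ($seg !== '' && $seg !== '.' && $seg !== '..') {
            $parts[] = $seg;
        }
    }
    return rtrim($root, '/') . '/' . implode('/', $parts);
}

// Create every missing directory above $file; true if it exists afterwards.
function ensure_parent_dir($file, $mode = 0755)
{
    $dir = dirname($file);
    return is_dir($dir) || mkdir($dir, $mode, true);
}
```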

The following should really be split into a couple of functions, but I was on a tight schedule, and hey, this $hitt ain't free... wait, yes it is, always.

// Ok lets get it on!

// first lets setup some variables

if (empty($_GET['url'])) die();

$td = $th = $urls = array();

$FDATE = date("m-d-y-His"); // "i" is minutes; "m" would repeat the month

$FTMP = '/web/askapache/sites/askapache.com/tmp';

$fetch_url = $_GET['url'];

$fu = parse_url($fetch_url);

$fd = substr($FTMP . $fu['path'], 0, - 1);

$FEXE = "{$fd}-{$FDATE}.exe";

$FZIP = "{$fd}-{$FDATE}.zip";

// now this is a shortcut to download the css file and add all the images in it to the img_urls array

$img_urls = array();

$gg = preg_match_all("/url\(([^)]*?)\)/Ui", gogeturl('https://www.askapache.com/askapache-0128770124.css'), $th);

$imgs = array_unique($th[1]);

foreach($imgs as $img)

{

// only because all the links are relative

$img_urls[] = 'https://www.askapache.com' . $img;

}

// now fetch the main page, and assemble an array of all the external resources into the urls array

$gg = preg_match_all("/(background|href)=([\"'])([^\"'#]+?)([\"'])/Ui", gogeturl($fetch_url), $th);

foreach($th[3] as $url)

{

if (strpos($url, '.js') !== false)continue;

if (strpos($url, 'wp-login.php') !== false || $url == 'https://www.askapache.com/') continue;

if (strrpos($url, '/') == strlen($url) - 1)continue;

if (strpos($url, 'https://www.askapache.com/') === false)

{

if ($url[0] == '/') $urls[] = 'https://www.askapache.com' . $url;

else continue;

}

else $urls[] = $url;

}

// now create a uniq array of urls, then download and save each of them

$urls = array_merge(array_unique($img_urls), array_unique($urls));

foreach($urls as $url)

{

$pu = parse_url($url);

rmkdir(substr($fd . $pu['path'], 0, strrpos($fd . $pu['path'], '/')));

gogeturl2($url, $fd . $pu['path']);

}

// delete the stray dir (i.e. /this-page/this-page/), since the page belongs at /this-page/index.html

if (is_dir($fd . $fu['path'])) rmdir($fd . $fu['path']);

// now save the page as index.html

gogeturl2($fetch_url, $fd . '/index.html');

// fixup to be able to parse

$g = file_get_contents($fd . '/index.html');

$g = str_replace('', 'script>!-->', $g);

$g = str_replace('href="https://www.askapache.com/', 'href="/', $g);

$g = str_replace('src="https://www.askapache.com/', 'src="/', $g);

$g = str_replace('href="/', 'href="', $g);

$g = str_replace('src="/', 'src="', $g);

$g = str_replace("href='https://www.askapache.com/", "href='/", $g);

$g = str_replace("src='https://www.askapache.com/", "src='/", $g);

$g = str_replace("href='/", "href='", $g);

$g = str_replace("src='/", "src='", $g);

file_put_contents($fd . '/index.html', $g);

// fixup for css file

foreach($urls as $url)

{

if (strpos($url, '.css') !== false)

{

$fuu = parse_url($url);

$css = file_get_contents($fd . $fuu['path']);

$css = str_replace('url(/', 'url(../', $css);

file_put_contents($fd . $fuu['path'], $css);

}

}

// my favorite technique, using fsockopen to initiate a shell script server-side.

// passing the args in the HTTP Headers... genius!!

// close the sucker fast with HTTP/1.0 and connection: close

$fp = fsockopen($_SERVER['SERVER_NAME'], 80, $errno, $errstr, 5);

if (!$fp) die("could not reach zip.sh: $errstr");

fwrite($fp, "GET /cgi-bin/sh/zip.sh HTTP/1.0\r\nHost: www.askapache.com\r\nX-Pad: {$fd}\r\nX-Allow: {$FDATE}\r\nConnection: Close\r\n\r\n");

fclose($fp);

// loop until the file created by /cgi-bin/sh/zip.sh is found

$c = 0;

do

{

$c++;

sleep(1);

clearstatcache();

if (is_file("{$FEXE}")) break; // break, not continue: in a do/while, continue only jumps to the while() test

}

while ($c < 20);

// either zip or exe

$type = isset($_GET['type']) ? $_GET['type'] : 'exe';

if ($type == 'zip') $file = $FZIP;

else $file = $FEXE;

// wow great debugging dude

error_log($file);

// if the file is there, do a 302 redirect to initiate download

if (file_exists("{$file}"))

{

@header("HTTP/1.1 302 Moved", 1, 302);

@header("Status: 302 Moved", 1, 302);

@header('Location: https://www.askapache.com/' . basename($file));

exit;

}

exit;

?>
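Pulled out of the listing, the parsing steps look roughly like this. It's a sketch, not a drop-in replacement: $base stands in for the hard-coded https://www.askapache.com, the function names are made up, page_urls() also matches src= (the original pattern only looks at href and background) but skips the wp-login.php and homepage checks, and fix_css() works out how many ../ a stylesheet needs from its depth instead of always using one.

```php
<?php
// scrapeit.php's parsing steps as small functions. $base is the site root
// without a trailing slash. All function names here are hypothetical.

// url(...) references in a stylesheet; only root-relative ones are kept.
function css_urls($css, $base)
{
    preg_match_all('/url\(\s*[\'"]?([^\'")\s]+)[\'"]?\s*\)/i', $css, $m);
    $out = array();
    foreach (array_unique($m[1]) as $u) {
        if ($u[0] === '/' && substr($u, 0, 2) !== '//') {
            $out[] = $base . $u;
        }
    }
    return $out;
}

// Same-site href/src/background targets, skipping pages (trailing /) and scripts.
function page_urls($html, $base)
{
    preg_match_all('/\b(?:background|href|src)=([\'"])([^\'"#]+)\1/i', $html, $m);
    $out = array();
    foreach ($m[2] as $u) {
        if (substr($u, -1) === '/' || strpos($u, '.js') !== false) {
            continue;
        }
        if (strpos($u, $base . '/') === 0) {
            $out[] = $u;
        } elseif ($u[0] === '/' && substr($u, 0, 2) !== '//') {
            $out[] = $base . $u;
        }
    }
    return array_values(array_unique($out));
}

// Strip the site prefix so index.html finds the files saved next to it.
function make_relative($html, $base)
{
    $abs = preg_quote($base . '/', '~');
    $html = preg_replace('~\b(href|src)=([\'"])' . $abs . '~i', '$1=$2', $html);
    // Root-relative links, but not protocol-relative "//host/..." ones.
    return preg_replace('~\b(href|src)=([\'"])/(?!/)~i', '$1=$2', $html);
}

// Point url(/...) in a stylesheet saved at URL path $css_path back to the root.
function fix_css($css, $css_path)
{
    $depth = substr_count(ltrim($css_path, '/'), '/');
    return str_replace('url(/', 'url(' . str_repeat('../', $depth), $css);
}
```

Using a regex in make_relative() instead of the chain of str_replace() calls lets it leave protocol-relative links such as //cdn.example/... alone.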

zip.sh

#!/bin/bash

# all you need for cgi

echo -e "Content-type: text/plain\n\n"

# blank that run log

echo "" > /web/askapache/sites/askapache.com/tmp/run.log

# redirect 1 and 2 to the run log for the whole script

exec &>/web/askapache/sites/askapache.com/tmp/run.log

# basename

N=${HTTP_X_PAD##*/}

# date-based

NN=${HTTP_X_ALLOW}

# create recursively the dir tree

mkdir -pv $HTTP_X_PAD

# cd to the tmp

cd /web/askapache/sites/askapache.com/tmp

# the zip version with date

F=$N-$NN.zip

# the exe version with date

NN=$N-$NN.exe

# for debugging, only goes to run log

echo "F=$F"

echo "NN=$NN"

echo "N=$N"

# create a relative (r is recursive) archive of the entire dir

/usr/bin/zip -rvv $F $N

# add the self-extracting stub to the archive

/bin/cat unzipsfx.exe $F > $NN

# fix the sfx stub

/usr/bin/zip -A $NN

# move both the exe and zip to the web-docroot to be dl'd directly

cp -vf $NN /web/askapache/sites/askapache.com/htdocs/

cp -vf $F /web/askapache/sites/askapache.com/htdocs/

# sleep for 60 seconds and then rm all the files, so you better download that file fast

sleep 60 && rm -rvf $HTTP_X_PAD $F $NN /web/askapache/sites/askapache.com/htdocs/$NN /web/askapache/sites/askapache.com/htdocs/$F

#suh suh cya

exit 0;
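Back on the PHP side, the loop that waits for zip.sh can return as soon as the archive exists instead of counting to 20. A minimal sketch with a hypothetical wait_for_file() helper:

```php
<?php
// Poll for the archive that zip.sh writes, returning as soon as it appears.
// wait_for_file() is a hypothetical helper name.

function wait_for_file($path, $timeout_sec = 20, $interval_ms = 250)
{
    $deadline = microtime(true) + $timeout_sec;
    do {
        // is_file() results are cached; clear the stat cache before each check.
        clearstatcache();
        if (is_file($path)) {
            return true; // found it, stop waiting
        }
        usleep($interval_ms * 1000);
    } while (microtime(true) < $deadline);
    return false; // gave up after $timeout_sec seconds
}

// Usage in scrapeit.php, replacing the do/while counter loop:
// if (!wait_for_file($FEXE)) { error_log("timed out waiting for $FEXE"); exit; }
```

Keep in mind that is_file() only proves the file exists, not that zip.sh has finished writing it or copying it into htdocs. Having the script build under a temporary name and mv it into place would close that gap, since a rename within one filesystem is atomic.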

Creating SFX Archives

The best option is 7z, but I couldn't get p7zip's SFX module to work and didn't have time to compile it. Instead I just used Info-ZIP's stub (the unzipsfx.exe that zip.sh prepends), available here: curl -O -L ftp://ftp.info-zip.org/pub/infozip/win32/unz552xn.exe. It works great, but there's no customization like a password, icons, etc. Oh well.

Implementation

Simple: just create a link to the PHP file with the url and type parameters, e.g. /cgi-bin/php/scrapeit.php?url=https://www.askapache.com/htaccess/&type=exe. If you integrate it into WordPress like I did, you can add the link to your header or admin bar and use get_permalink() for the url argument.
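The url value contains its own : and / characters, so it's safer to encode it when building the link. A minimal sketch; scrape_link() is a hypothetical helper, and the path is the /cgi-bin/php/scrapeit.php location used above:

```php
<?php
// Build the download link with the page URL properly query-encoded.
// scrape_link() is a hypothetical helper name.

function scrape_link($page_url, $type = 'exe')
{
    return '/cgi-bin/php/scrapeit.php?' . http_build_query(array(
        'url'  => $page_url,
        'type' => $type === 'zip' ? 'zip' : 'exe',
    ));
}
```

In a WordPress template you could echo esc_url(scrape_link(get_permalink(), 'zip')) inside an anchor's href.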

Lock It Down

This was used on a private site, so I added some code to scrapeit.php that copies the HTTP_COOKIE value sent by the requesting user and includes it in the fsockopen request. That means only logged-in users can do this, and furthermore, a user who doesn't have access to a page can't use this to get around that. I also used .htaccess to restrict the scripts so that only the server's own IPs can connect.
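Here's roughly what that fsockopen request looks like with the cookie added, as a sketch: build_trigger_request() and no_crlf() are hypothetical names, while X-Pad and X-Allow are the headers zip.sh reads. Stripping CR and LF matters because $fd is derived from the user-supplied url parameter and ends up in a header line.

```php
<?php
// Build the raw HTTP/1.0 request that triggers zip.sh, forwarding the
// visitor's cookie. build_trigger_request() and no_crlf() are hypothetical.

// Remove CR and LF so a crafted value cannot start a new header line.
function no_crlf($value)
{
    return str_replace(array("\r", "\n"), '', (string) $value);
}

function build_trigger_request($host, $path, $pad, $stamp, $cookie = '')
{
    $lines = array(
        'GET ' . no_crlf($path) . ' HTTP/1.0',
        'Host: ' . no_crlf($host),
        'X-Pad: ' . no_crlf($pad),
        'X-Allow: ' . no_crlf($stamp),
    );
    if ($cookie !== '') {
        $lines[] = 'Cookie: ' . no_crlf($cookie);
    }
    $lines[] = 'Connection: close';
    // CRLF after every header line, then an empty line ends the request.
    return implode("\r\n", $lines) . "\r\n\r\n";
}

// Usage in scrapeit.php:
// $cookie = isset($_SERVER['HTTP_COOKIE']) ? $_SERVER['HTTP_COOKIE'] : '';
// $req = build_trigger_request('www.askapache.com', '/cgi-bin/sh/zip.sh', $fd, $FDATE, $cookie);
// $fp = fsockopen($_SERVER['SERVER_NAME'], 80, $errno, $errstr, 5);
// if ($fp) { fwrite($fp, $req); fclose($fp); }
```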

Conclusion

What can't be done with Linux, bash, HTTP, PHP, and a little server-side finesse? My clients are very happy, and I had some fun!
