Optimizing Servers and Processes for Speed with ionice, nice, ulimit

Ok, sup. I really felt I had to get this out of the way. I have a whole stack of drafts waiting to be published, but I realized that not many people will benefit from the advanced optimizations and tricks I'm writing about unless they first get a basic understanding of the tools I'm using. I decided to write a series of articles explaining how I optimize servers for speed, because lately a lot more people have been wanting to hire me to do that. I take on projects when I can, but there is clearly a need out here on the net for some self-help. The momentum is swinging more and more towards VPS-style web hosting, and I would say that 99% of those customers are getting supremely ripped off, which goes against the foundation of the web.

Keep in mind that this blog and my research are only a hobby of mine; my job is primarily marketing and sales, so I'm not some licensed expert or anything, or even an unlicensed expert! haha. But it does bother me that those who are tech-savvy enough to run web-hosting companies are happily ripping people off. So this article details the main tools used to speed up and optimize your machine by assigning levels of priority to specific processes. Future articles will use these tools a lot, so this is meant as an intro.

CPU and Disk I/O

As most of you are aware, there are two main factors that determine a computer's or program's speed: CPU and disk I/O. The CPU determines how fast you can process data, crunch numbers, etc., while disk I/O determines how fast data can be read from and written to your hard drives. Wouldn't it be great if you could easily configure your server to give your httpd, php, and other important processes more CPU time and more disk I/O than your less important processes like backup scripts, ftp daemons, etc.? We are talking about Linux in this article, so of course YES, not only can you do that, you should!

RAM

RAM is like a hard drive in that data is stored on it and read from and written to it. The difference is that RAM is vastly faster than disk I/O (its access times are measured in nanoseconds, versus milliseconds for a spinning hard drive), but the cost of that incredible speed is that RAM is volatile: whatever is stored on it is lost when your machine is rebooted or powered off. RAM is also expensive, and the amount a server or machine can hold is limited by its hardware.

SWAP

SWAP kicks in when you run out of RAM: the kernel moves less recently used pages of memory out to SWAP space on disk to make room, and reads them back in when they are needed again. Because SWAP lives on a disk, it is far slower than real RAM. SWAP is usually a dedicated partition, ideally on a second hard drive. There is an upper limit on how much I/O a disk can handle at one time, and the more I/O takes place, the slower all of it becomes, so SWAP works well on a separate hard drive where it doesn't compete with the rest of your disk traffic. On Windows they opted to copy the SWAP mechanism but use a file named pagefile.sys instead, and that is just one reason people in the know do not care for Windows.
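To check how much SWAP your server is actually using, here is a minimal sketch, assuming Linux and bash (swap_report is a hypothetical helper name). It reads SwapTotal and SwapFree from /proc/meminfo, the same counters behind the Swap row of free. If the used figure keeps climbing while your sites feel slow, that is a hint the box is short on RAM.

```shell
#!/usr/bin/env bash
# Sketch: how much SWAP is in use right now, read from /proc/meminfo
# (Linux-only; the kernel reports these values in kB).
swap_report() {
  local key value unit total=0 free=0
  while read -r key value unit; do
    case $key in
      SwapTotal:) total=$value ;;
      SwapFree:)  free=$value ;;
    esac
  done < /proc/meminfo
  echo "swap used: $(( total - free )) kB of $total kB"
}

swap_report
```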

CPU

So let's do this: think of your CPU (your processor) as having 100% of its processing available when idle, and 0% when it's maxed out. CPUs handle multiple processing tasks simultaneously, so what we will discuss in this article is how to specify HOW MUCH of that processing each of your programs (hereafter "processes") gets. Yes, very very cool.

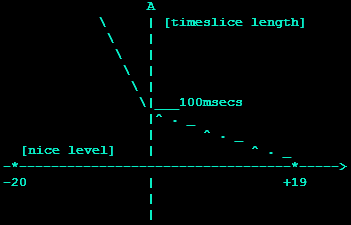

That is correct, you can easily configure your server to give more of the available processing time to certain programs than to others. For example, you can configure apache and php so that, whenever the CPU is busy, they get a much bigger share of processing time than all other processes (proftpd, sshd, rsync, etc.) combined. This is a relative weighting, not a hard cap: when nothing else wants the CPU, any process can still use it. The terminology is that we give specific processes (like php.cgi, httpd, fast-cgi.cgi) a specific priority, or "niceness", where -20 is the most priority and +19 is the least amount of priority, or CPU processing time. I know it seems backwards..

The Tools

If you run Windows, you are in the right place... because the following advice will save your life: GET LINUX! Ok, now that that is out of the way, the following are the tools discussed on this page. All of them are free, open-source, and wonderful. The basic idea of these tools is to control how much CPU time and how much disk I/O is given to each process.

- nice

- run a program with modified scheduling priority

- renice

- alter priority of running processes

- ionice

- set or retrieve the I/O priority for a given pid or execute a new task with a given I/O priority.

- iostat

- Report Central Processing Unit (CPU) statistics and input/output statistics for devices and partitions.

- ulimit

- Ulimit provides control over the resources available to processes started by the shell, on systems that allow such control.

- chrt

- set or retrieve real-time scheduling parameters for a given pid or execute a new task under given scheduling parameters.

- taskset

- set or retrieve task CPU affinity for a given pid or execute a new task under a given affinity mask.

Part 1: Process Processes Faster

Ok, so let's tackle how to give more priority to your response-intensive processes (like apache, php, ruby, perl, java), meaning processes where a request to your server/machine requires a response. For instance, when you requested the page you are reading at this very second, several things on my server had to happen for you to be able to read it.

First your computer sends out a DNS request to find out which server the www.askapache.com domain name points to. DNS servers respond with my server's IP, so for servers dedicated as nameservers, optimizing DNS processes like bind would speed that step up. Now that your computer knows how to reach my server, it sends an HTTP GET request for this URL. This request is received by the httpd process that is Apache, and Apache determines that this URL should be handled by my custom-compiled php 5.3.0 binary, because this page is generated by WordPress. So the php binary loads the WordPress /index.php file, which chain-loads several other php files, including wp-config.php, which contains my MySQL database settings. Now php connects to my MySQL server to fetch this article's content, comments, title, tags, etc., then generates the HTML and hands it back to Apache.

Finally, Apache generates an HTTP response and sends the response headers and content back to your browser, which in turn renders the page for your eyes, fetching the javascript, images, css, and other files referenced in the HTML.

Too much Processing

Now you see why I've opted to write my own caching plugin that takes the php and mysql processes OUT of that equation. Both the php binary and the mysql instance consume CPU processing and disk I/O to load all their library files, make various network requests and sockets, check permissions, and on and on. And that's completely ok; the thing is, unless you configure these processes (Apache, PHP, MySQL), they get the same scheduling priority as every other process on the machine, including processes that have very little to do with you reading this sentence: processes that run my mail server, my FTP server, my SSH server, my cronjobs, cleanup scripts, the atd daemon, etc. And they will all get the same claim on the CPU!

Another even simpler example is what got me to look into this myself. I wrote a shell script that created hourly, daily, weekly, and monthly backups for all of my websites and SQL databases, and set it up to run by cronjob at those intervals. Eventually I noticed my sites were slower, my php even slower, and sometimes I even saw the 503 errors my host throws up when my server is overloaded. The research I pursued to prevent that from happening has been hugely eye-opening. What does a backup script do? Mine just created tar archives of all the files in my web root, gzipped them, and copied them to a backup server using scp (a file transfer over ssh). This resulted in the following huge problems, which seem to have nothing to do with a faster server and speedier website, but have everything to do with it.

- CPU Bottleneck #1 - tar hands the archive to gzip, and gzip's compression algorithm needs a whole lot of number crunching, i.e. CPU processing.

- DISK IO Bottleneck - Tarring the whole web root directory was creating a ton of disk io, and remember the more disk io that is going on, the less is available for everything else.

- CPU Bottleneck #2 - Using scp to send my backups was security-smart, but these huge archive files had to be encrypted before being sent over the net, and encryption is CPU-intensive too.
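A minimal sketch of how a backup job like the one above could be tamed, assuming a bash cron script; WEB_ROOT and BACKUP_DIR are hypothetical variable names, and when WEB_ROOT isn't set the script builds a throwaway demo directory. nice takes care of the CPU side and ionice the disk side, and the gzip child that tar spawns inherits both settings.

```shell
#!/usr/bin/env bash
# Sketch of a low-priority backup. WEB_ROOT and BACKUP_DIR are hypothetical;
# without WEB_ROOT, a throwaway demo web root is created.
if [ -z "${WEB_ROOT:-}" ]; then
  WEB_ROOT=$(mktemp -d)
  echo '<h1>hello</h1>' > "$WEB_ROOT/index.html"
fi
BACKUP_DIR=${BACKUP_DIR:-$(mktemp -d)}
archive="$BACKUP_DIR/site-$(date +%Y%m%d-%H%M%S).tar.gz"

# nice -n 19: lowest CPU priority for tar and its gzip child (bottleneck #1).
# ionice -c2 -n7: lowest best-effort disk priority (the disk I/O bottleneck);
# ionice -c3 would go further and only touch the disk when it is otherwise idle.
nice -n 19 ionice -c2 -n7 tar -czf "$archive" -C "$WEB_ROOT" .
echo "wrote $archive"
```

The scp step (bottleneck #2) can take the same nice -n 19 prefix, so the encryption work also yields to Apache and PHP.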

Breaking Bottles

I apologize for being a little long-winded there, but I think it's important to make sure everyone understands those basic concepts, which are foreign to most people. Once you understand what is causing the bottlenecks, then you can understand the solutions, which actually are incredibly simple and even a novice linux user can easily do. Besides, the net gets a little bit faster every time someone implements this.

nice

Nice allows you to run a program with a modified scheduling priority (niceness), which determines how much CPU time the process gets relative to other processes. It runs COMMAND with an adjusted niceness, which affects process scheduling. With no COMMAND, it prints the current niceness.


Nicenesses range from -20 (most favorable scheduling) to 19 (least favorable). The option -n, --adjustment=N adds integer N to the niceness (default 10). With the current scheduler, a nice +19 task competing with a nice 0 task gets a HZ-independent 1.5% or so of the CPU, and running a nice +10 and a nice +11 task side by side means the first will get about 55% of the CPU and the other 45%.

nice usage

nice [OPTION] [COMMAND [ARG]...]
-n, --adjustment=ADJUST   increment priority by ADJUST first

Examples of nice

Using nice to download a file

nice -n 17 curl -q -v -A 'Mozilla/5.0' -L -O http://wordpress.org/latest.zip

Unzipping a file with nice

nice -n 17 unzip latest.zip

Nice way to build from source

nice -n 2 ./configure
nice -n 2 make
nice -n 2 make install

It is sometimes useful to run non-interactive programs with reduced priority.

$ nice factor `echo '2^9 - 1'|bc`
511: 7 73

Since nice prints the current niceness when given no command, we can invoke it through itself to demonstrate how it works. The default behavior is to increase niceness (that is, reduce priority) by 10:

$ nice nice
10
$ nice -n 10 nice
10

The ADJUSTMENT is relative to the current priority. The first nice invocation runs the second one at priority 10, and it in turn runs the final one at a priority lowered by 3 more.

$ nice nice -n 3 nice
13

Specifying a priority larger than 19 is the same as specifying 19.

$ nice -n 30 nice
19

Only a privileged user may run a process with higher priority.

$ nice -n -1 nice
nice: cannot set priority: Permission denied
$ sudo nice -n -1 nice
-1

The Completely Fair Scheduler (CFS) introduced in Linux v2.6.23 addressed three long-standing complaints about nice levels:

To address the first complaint (of nice levels being not "punchy" enough), the scheduler was decoupled from 'time slice' and HZ concepts (and granularity was made a separate concept from nice levels) and thus it was possible to implement better and more consistent nice +19 support: with the new scheduler nice +19 tasks get a HZ-independent 1.5%, instead of the variable 3%-5%-9% range they got in the old scheduler.

To address the second complaint (of nice levels not being consistent), the new scheduler makes nice(1) have the same CPU utilization effect on tasks, regardless of their absolute nice levels. So on the new scheduler, running a nice +10 and a nice +11 task has the same CPU utilization "split" between them as running a nice -5 and a nice -4 task (one will get 55% of the CPU, the other 45%). That is why nice levels were changed to be "multiplicative" (or exponential): that way it does not matter which nice level you start out from, the 'relative result' will always be the same.
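To see where the 55%/45% split and the roughly 1.5% figure come from, here is a small bash sketch. The weights array is copied from the kernel's CFS nice-to-weight table (each nice step is roughly a factor of 1.25), and cpu_share is a hypothetical helper that prints the share of one CPU a nice-A task gets when it competes with a single nice-B task, assuming both are CPU-bound on the same CPU.

```shell
#!/usr/bin/env bash
# CFS weight for each nice level, -20 .. 19 (from the kernel's
# sched_prio_to_weight table); each step is roughly a factor of 1.25.
weights=(
  88761 71755 56483 46273 36291   # -20 .. -16
  29154 23254 18705 14949 11916   # -15 .. -11
   9548  7620  6100  4904  3906   # -10 ..  -6
   3121  2501  1991  1586  1277   #  -5 ..  -1
   1024   820   655   526   423   #   0 ..   4
    335   272   215   172   137   #   5 ..   9
    110    87    70    56    45   #  10 ..  14
     36    29    23    18    15   #  15 ..  19
)

# cpu_share A B: percent (one decimal, truncated) of one CPU that a nice-A
# task gets when competing with a single nice-B task.
cpu_share() {
  local wa=${weights[$1 + 20]} wb=${weights[$2 + 20]}
  local tenths=$(( 1000 * wa / (wa + wb) ))
  printf '%d.%d\n' $(( tenths / 10 )) $(( tenths % 10 ))
}

cpu_share 10 11    # 55.8
cpu_share -5 -4    # 55.5
cpu_share 19 0     # 1.4
```

Both pairs land at about 55%, which is the "multiplicative" behavior described above: only the difference between two nice levels matters, not where they start.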

The third complaint (of negative nice levels not being "punchy" enough and forcing audio apps to run under the more dangerous SCHED_FIFO scheduling policy) is addressed by the new scheduler almost automatically: stronger negative nice levels are an automatic side-effect of the recalibrated dynamic range of nice levels.

renice

Renice is similar to the nice command, but it lets you change the niceness of a process that is already running. This is handy in shell scripts: add it to the top of a script to renice the whole script to 19.

renice usage

renice priority [ [ -p ] pids ] [ [ -g ] pgrps ] [ [ -u ] users ]
-g   Force who parameters to be interpreted as process group IDs.
-u   Force the who parameters to be interpreted as user names.
-p   Resets the who interpretation to be (the default) process IDs.

Examples of renice

From the shell, this changes the priority of the shell, and of any children it starts afterward, to 19. From a shell script, it does the same but only for the script and its children.

renice 19 -p $$

This runs renice without any output

renice 19 -p $$ &>/dev/null

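A niceness of 10 gets more CPU than 19 (note that unprivileged users can only raise niceness, so going back from 19 to 10 requires root or a raised RLIMIT_NICE):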

renice 10 -p $$

Change the priority of process IDs 987 and 32, and of all processes owned by users daemon and root:

renice +1 987 -u daemon root -p 32
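A minimal sketch of renice on a running job, assuming Linux and bash: a throwaway sleep stands in for a long-running job such as a backup, it gets reniced to 19, and its niceness is read back from /proc/PID/stat (field 19) instead of ps, so it also works on stripped-down servers without procps. get_nice is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: renice a running job and read its niceness back from /proc.
# Linux-only: field 19 of /proc/PID/stat is the process's nice value.

get_nice() {
  local stat rest
  stat=$(< "/proc/$1/stat") || return 1
  rest=${stat##*") "}    # drop "PID (comm) " so a comm with spaces can't shift fields
  set -- $rest           # fields 3, 4, 5, ... become $1, $2, $3, ...
  echo "${17}"           # overall field 19 is the 17th remaining field
}

sleep 30 &               # stand-in for a long-running job (e.g. a backup)
job=$!

renice 19 -p "$job" >/dev/null   # raising niceness needs no privileges
job_nice=$(get_nice "$job")
echo "job $job now runs at niceness $job_nice"

kill "$job"
wait "$job" 2>/dev/null || true
```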

Part 2: Optimizing Disk I/O

Linux Scheduling Policies

The scheduler is the kernel component that decides which runnable process will be executed by the CPU next. Each process has an associated scheduling policy and a static scheduling priority, sched_priority.

Currently, Linux supports the following "normal" (i.e., non-real-time) scheduling policies. Processes scheduled under them always have a sched_priority of 0; how much CPU they get is governed by their nice value instead.

- SCHED_OTHER: Default Linux time-sharing scheduling

- The standard round-robin time-sharing policy

- SCHED_BATCH: Scheduling batch processes

- This policy is useful for workloads that are non-interactive, but do not want to lower their nice value, and for workloads that want a deterministic scheduling policy without interactivity causing extra preemptions (between the workload's tasks).

- SCHED_IDLE: Scheduling very low priority jobs

- This policy is intended for running jobs at extremely low priority (lower even than a +19 nice value with the SCHED_OTHER or SCHED_BATCH policies)

The following "real-time" policies are also supported, for special time-critical applications that need precise control over the way in which runnable processes are selected for execution. Processes scheduled under one of the real-time policies (SCHED_FIFO, SCHED_RR) have a sched_priority value in the range 1 (low) to 99 (high). Since normal processes sit at 0, real-time processes always have higher priority than normal processes.

- SCHED_FIFO: First In-First Out scheduling

- A first-in, first-out policy

- SCHED_RR: Round Robin scheduling

- A round-robin policy.
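You can ask the kernel for these ranges yourself. A minimal sketch, assuming the util-linux version of chrt: -m prints the minimum and maximum valid sched_priority for each policy (expect 1/99 for SCHED_FIFO and SCHED_RR, 0/0 for the normal policies), and -p shows the policy of a given PID, here the current shell.

```shell
#!/usr/bin/env bash
# Show the sched_priority range the kernel reports for each policy
# (util-linux chrt), then the policy the current shell runs under.
ranges=$(chrt -m)
echo "$ranges"

self_policy=$(chrt -p $$)
echo "$self_policy"
```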

Scheduling Classes

IOPRIO_CLASS_RT - This is the real-time I/O class. Processes in this class are given first access to the disk every time, regardless of what else is going on in the system, so it needs to be used with some care: one RT I/O process can starve the entire system. As with the best-effort class, 8 priority levels (the class data) are defined, determining how big a time slice a given process receives on each scheduling window. In the future this might change to be more directly mappable to performance, by passing in a wanted data rate instead.

IOPRIO_CLASS_BE - This is the best-effort scheduling class, the default for any process that hasn't asked for a specific I/O priority. Programs inherit their CPU nice setting for I/O priorities. This class takes a priority argument from 0-7, with a lower number being a higher priority (0 is the highest best-effort level, 7 the lowest); the levels map directly to the CPU nice levels, just more coarsely. Programs running at the same best-effort priority are served in a round-robin fashion. The mapping between CPU nice level and I/O nice level is: io_nice = (cpu_nice + 20) / 5.

IOPRIO_CLASS_IDLE - This is the idle scheduling class. A program running with idle I/O priority only gets disk time when no other program has asked for disk I/O for a defined grace period, so the impact of idle I/O processes on normal system activity should be zero. This class takes no priority argument (class data), since it doesn't really apply here.
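The io_nice mapping above is plain integer arithmetic. A tiny bash sketch (io_nice_from_cpu_nice is a hypothetical helper name) shows which best-effort level a process ends up with for a few CPU nice values:

```shell
#!/usr/bin/env bash
# Best-effort I/O level inherited from a CPU nice value, using
# io_nice = (cpu_nice + 20) / 5 with integer division.
io_nice_from_cpu_nice() {
  echo $(( ($1 + 20) / 5 ))
}

for n in -20 -10 0 10 19; do
  echo "cpu nice $n -> best-effort io level $(io_nice_from_cpu_nice "$n")"
done
# cpu nice -20 -> 0, -10 -> 2, 0 -> 4, 10 -> 6, 19 -> 7
```

This is why an ordinary nice 0 process sits at best-effort level 4, and why renicing a backup to 19 also pushes its disk I/O down to level 7.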

ionice

ionice - get/set program I/O scheduling class and priority. This program sets the I/O scheduling class and priority for a program. Since v3 (aka CFQ Time Sliced), CFQ implements I/O nice levels similar to those of CPU scheduling. These nice levels are grouped into the three scheduling classes described above, each containing one or more priority levels.

ionice usage

If no arguments or just -p is given, ionice will query the current io scheduling class and priority for that process.

ionice [-c] [-n] [-p] [COMMAND [ARG...]]

- -c - The scheduling class. 1 for real time, 2 for best-effort, 3 for idle.

- -n - The scheduling class data. This defines the class data, if the class accepts an argument. For real time and best-effort, 0-7 is valid data.

- -p - Pass in a process pid to change an already running process. If this argument is not given, ionice will run the listed program with the given parameters.

ionice Examples

Sets process with PID 89 as an idle io process.

ionice -c3 -p89

Runs 'bash' as a best-effort program with highest priority.

ionice -c2 -n0 bash

Returns the class and priority of the process with PID 89

ionice -p89
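A minimal sketch of the same set-then-query cycle, assuming Linux, bash, and util-linux's ionice: a throwaway sleep stands in for a disk-heavy job, it is moved to the lowest best-effort level with -p, and ionice -p then reports the new setting. An ordinary user can do this for their own processes; only the real-time class needs root.

```shell
#!/usr/bin/env bash
# Sketch: lower the I/O priority of an already-running job, then read it back.
sleep 30 &                        # stand-in for a disk-heavy job such as updatedb
job=$!

ionice -c2 -n7 -p "$job"          # best-effort class, lowest level (7)
job_ioprio=$(ionice -p "$job")    # e.g. "best-effort: prio 7"
echo "$job_ioprio"

kill "$job"
wait "$job" 2>/dev/null || true
```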

With the ionice command, you can set the IO priority for a process to one of three classes: Idle (3), Best Effort (2), and Real Time (1). The Idle class means that the process will only be able to read and write to the disk when no other processes are using the disk. The Best Effort class is the default and has eight different priority levels, from 0 (top priority) to 7 (lowest priority). The Real Time class gives the process first access to the disk regardless of other processes, and should never be used unless you know what you are doing.

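If we wish to run the updatedb process in the background with an Idle IO class priority, we can run the following (the first sudo date just caches your sudo credentials, so the backgrounded sudo doesn't stop to ask for a password):

$ sudo date
$ sudo updatedb &
[1] 16324
$ sudo ionice -c3 -p16324

If we'd rather just lower the Best Effort class priority (which defaults to 4) so the process isn't limited to idle IO periods, we can run the following:

$ sudo date
$ sudo updatedb &
[1] 16324
$ sudo ionice -c2 -n7 -p16324

Again, the Real Time class should not be used, as it can prevent you from being able to interact with your system. One caveat: on many systems sudo stays running as the parent of the command, so the job PID the shell prints may belong to sudo rather than to updatedb itself. In that case, look up updatedb's own PID as described below, or start it with the priority already set: sudo ionice -c3 updatedb &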

You may wonder where you can get the process ID if you don't know it, can't remember it, or didn't start the process (an automated script may have launched it). You can find process IDs with the ps command.

For example, if I had an updatedb program running in the background, and I wanted to find its process ID, I can run the following:

$ ps -C updatedb
  PID TTY          TIME CMD
 4234 ?        00:00:42 updatedb

This tells me that the process' process ID (PID) is 4234.

iostat

iostat Usage

iostat [ -c ] [ -d ] [ -N ] [ -n ] [ -h ] [ -k | -m ] [ -t ] [ -V ] [ -x ] [ -z ] [ device [...] | ALL ] [ -p [ device [,...] | ALL ] ] [ interval [ count ] ]

-c   The -c option is exclusive of the -d option and displays only the CPU usage report.
-d   The -d option is exclusive of the -c option and displays only the device utilization report.
-k   Display statistics in kilobytes per second instead of blocks per second. Data displayed are valid only with kernels 2.4 and newer.
-m   Display statistics in megabytes per second instead of blocks or kilobytes per second. Data displayed are valid only with kernels 2.4 and newer.
-n   Displays the NFS-directory statistic. Data displayed are valid only with kernels 2.6.17 and newer. This option is exclusive of the -x option.
-h   Display the NFS report more human readable.
-p [ { device | ALL } ]   The -p option is exclusive of the -x option and displays statistics for block devices and all their partitions that are used by the system.
-t   Print the time for each report displayed.
-x   Display extended statistics.

iostat Examples

iostat -p ALL 2 1000

avg-cpu:  %user   %nice    %sys %iowait   %idle
           8.34    0.08    1.26    2.27   88.05

Display a single history since boot report for all CPU and Devices.

$ iostat

Display a continuous device report at two second intervals.

$ iostat -d 2

Display six reports at two second intervals for all devices.

$ iostat -d 2 6

Display six reports of extended statistics at two second intervals for devices hda and hdb.

$ iostat -x hda hdb 2 6

Display six reports at two second intervals for device sda and all its partitions (sda1, etc.)

$ iostat -p sda 2 6
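iostat's avg-cpu numbers come from the kernel's cumulative counters in /proc/stat. If sysstat isn't installed, here is a rough sketch, assuming Linux and bash, that samples the aggregate cpu line twice and prints percentages in the same spirit. cpu_sample and cpu_report are hypothetical helper names, and folding irq/softirq into %sys while ignoring steal is a simplification, not exactly what iostat does.

```shell
#!/usr/bin/env bash
# Rough, iostat-like avg-cpu report built from two samples of the aggregate
# "cpu" line in /proc/stat (Linux-only). Simplifications: irq and softirq
# time are folded into %sys, and steal/guest time is ignored.

cpu_sample() {
  local label user nice sys idle iowait irq softirq rest
  read -r label user nice sys idle iowait irq softirq rest < /proc/stat
  echo "$user $nice $(( sys + irq + softirq )) $iowait $idle"
}

cpu_report() {
  local interval=${1:-1} u1 n1 s1 w1 i1 u2 n2 s2 w2 i2 x total=0
  local -a d=()
  read -r u1 n1 s1 w1 i1 <<< "$(cpu_sample)"
  sleep "$interval"
  read -r u2 n2 s2 w2 i2 <<< "$(cpu_sample)"
  for x in $(( u2 - u1 )) $(( n2 - n1 )) $(( s2 - s1 )) $(( w2 - w1 )) $(( i2 - i1 )); do
    if (( x < 0 )); then x=0; fi   # the iowait counter can go backwards
    d+=("$x")
    total=$(( total + x ))
  done
  if (( total == 0 )); then total=1; fi
  printf '%%user=%d %%nice=%d %%sys=%d %%iowait=%d %%idle=%d\n' \
    $(( 100 * d[0] / total )) $(( 100 * d[1] / total )) $(( 100 * d[2] / total )) \
    $(( 100 * d[3] / total )) $(( 100 * d[4] / total ))
}

cpu_report 1
```

A steadily high %iowait while your backups run is the disk I/O bottleneck described earlier showing up in numbers.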

Schedule Utils

These are the Linux scheduler utilities - schedutils for short. These programs take advantage of the scheduler family of syscalls that Linux implements across various kernels. These system calls implement interfaces for scheduler-related parameters such as CPU affinity and real-time attributes. The standard UNIX utilities do not provide support for these interfaces -- thus this package.

The programs that are included in this package are chrt and taskset. Together with nice and renice (not included), they allow full control of process scheduling parameters. Suggestions for related utilities are welcome, although it is believed (barring new interfaces) that all scheduling interfaces are covered.

I've found that quite a few servers do not have this package installed, which tells you their admins might not know what they are doing. (On newer distributions chrt and taskset ship with util-linux, so check with command -v taskset first.) Here is how you can install this incredible package as a non-root user. Root users know how to do this, or they shouldn't be root. You can download and install it in one line, provided you have curl, or just run the separate commands that follow.

mkdir -pv $HOME/{dist,source,bin,share/man/man1} && cd ~/dist && curl -O http://ftp.de.debian.org/debian/pool/main/s/schedutils/schedutils_1.5.0.orig.tar.gz && cd ~/source && tar -xvzf ~/dist/sch*z && cd sch* && sed -i -e 's,= /usr/local,=${HOME},g' Makefile && make && make install && make installdoc

mkdir -pv $HOME/{dist,source,bin,share/man/man1}

cd ~/dist && curl -O http://ftp.de.debian.org/debian/pool/main/s/schedutils/schedutils_1.5.0.orig.tar.gz

cd ~/source && tar -xvzf ~/dist/schedutils_1.5.0.orig.tar.gz

cd ~/source/schedutils-1.5.0 && sed -i -e 's,= /usr/local,=${HOME},g' Makefile

make && make install && make installdoc

If any step fails, rerun that step with make's -d flag (for example make install -d) to get debug output.

taskset

Taskset is used to set or retrieve the CPU affinity of a running process given its PID or to launch a new COMMAND with a given CPU affinity. CPU affinity is a scheduler property that "bonds" a process to a given set of CPUs on the system. The Linux scheduler will honor the given CPU affinity and the process will not run on any other CPUs. Note that the Linux scheduler also supports natural CPU affinity: the scheduler attempts to keep processes on the same CPU as long as practical for performance reasons. Therefore, forcing a specific CPU affinity is useful only in certain applications.

The CPU affinity is represented as a bitmask, with the lowest order bit corresponding to the first logical CPU and the highest order bit corresponding to the last logical CPU. Not all CPUs may exist on a given system but a mask may specify more CPUs than are present. A retrieved mask will reflect only the bits that correspond to CPUs physically on the system. If an invalid mask is given (i.e., one that corresponds to no valid CPUs on the current system) an error is returned. A user must possess CAP_SYS_NICE to change the CPU affinity of a process. Any user can retrieve the affinity mask.
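To make the bitmask notation concrete, here is a small bash sketch with two hypothetical helpers, list_to_mask and mask_to_list, that convert between the hex mask and the cpu-list format taskset accepts with -c. It only does the arithmetic; it does not check which CPUs actually exist on your machine.

```shell
#!/usr/bin/env bash
# Hypothetical helpers converting between taskset's two CPU notations:
# a hex bitmask (bit 0 = CPU 0) and a cpu-list such as "0,3,7-11".

list_to_mask() {
  local IFS=, part lo hi cpu mask=0
  for part in $1; do
    if [[ $part == *-* ]]; then
      lo=${part%-*} hi=${part#*-}
    else
      lo=$part hi=$part
    fi
    for (( cpu = lo; cpu <= hi; cpu++ )); do
      mask=$(( mask | (1 << cpu) ))
    done
  done
  printf '%x\n' "$mask"
}

mask_to_list() {
  local mask=$(( 16#${1#0x} )) cpu=0 cpus=()
  while (( mask > 0 )); do
    if (( mask & 1 )); then cpus+=("$cpu"); fi
    mask=$(( mask >> 1 ))
    cpu=$(( cpu + 1 ))
  done
  local IFS=,
  echo "${cpus[*]}"
}

list_to_mask 0,3,7-11    # f89
mask_to_list 03          # 0,1
```

So taskset -pc 0,3,7-11 700 and taskset -p f89 700 describe the same affinity; on a real PID you can cross-check with taskset -p and taskset -cp.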

taskset Usage

taskset [options] [mask | cpu-list] [pid | cmd [args...]]
-p, --pid        operate on existing given pid
-c, --cpu-list   display and specify cpus in list format

taskset Examples

The default behavior is to run a new command:

$ taskset 03 sshd -b 1024

You can retrieve the mask of an existing task or set it:

$ taskset -p 700
$ taskset -p 03 700

List format uses a comma-separated list instead of a mask:

$ taskset -pc 0,3,7-11 700

chrt

chrt sets or retrieves the real-time scheduling attributes of an existing PID or runs COMMAND with the given attributes. Both policy (one of SCHED_FIFO, SCHED_RR, or SCHED_OTHER) and priority can be set and retrieved. A user must possess CAP_SYS_NICE to change the scheduling attributes of a process. Any user can retrieve the scheduling information.

chrt Usage

chrt [options] [prio] [pid | cmd [args...]]
-p, --pid     operate on an existing PID and do not launch a new task
-f, --fifo    set scheduling policy to SCHED_FIFO
-m, --max     show minimum and maximum valid priorities, then exit
-o, --other   set scheduling policy to SCHED_OTHER
-r, --rr      set scheduling policy to SCHED_RR (the default)

chrt Examples

The default behavior is to run a new command:

chrt [prio] -- [command] [arguments]

You can also retrieve the real-time attributes of an existing task:

chrt -p [pid]

Or set them:

chrt -p [prio] [pid]
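A minimal sketch, assuming the util-linux version of chrt, which also accepts -b for SCHED_BATCH (the schedutils usage listed above only shows -f, -o and -r). It moves a throwaway job to SCHED_BATCH, which an ordinary user may do for their own processes because it doesn't raise their priority, and reads the policy back.

```shell
#!/usr/bin/env bash
# Sketch: move an already-running, non-interactive job to SCHED_BATCH.
sleep 30 &                       # stand-in for a batch job (e.g. a backup)
job=$!

chrt -b -p 0 "$job"              # SCHED_BATCH only accepts priority 0
job_policy=$(chrt -p "$job")     # reports the current policy and priority
echo "$job_policy"

kill "$job"
wait "$job" 2>/dev/null || true
```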

ulimit - get and set user limits

Ulimit provides control over the resources available to processes started by the shell, on systems that allow such control. One can set the resource limits of the shell using the built-in ulimit command. The shell's resource limits are inherited by the processes that it creates to execute commands.

ulimit Usage

ulimit [-SHacdfilmnpqstuvx] [limit]

- -S

- use the `soft' resource limit

- -H

- use the `hard' resource limit

- -a

- all current limits are reported

- -c

- the maximum size of core files created

- -d

- the maximum size of a process's data segment

- -f

- the maximum size of files created by the shell

- -l

- the maximum size a process may lock into memory

- -m

- the maximum resident set size

- -n

- the maximum number of open file descriptors

- -p

- the pipe buffer size

- -s

- the maximum stack size

- -t

- the maximum amount of cpu time in seconds

- -u

- the maximum number of user processes

- -v

- the size of virtual memory

If LIMIT is given, it is the new value of the specified resource; the special LIMIT values `soft', `hard', and `unlimited' stand for the current soft limit, the current hard limit, and no limit, respectively. Otherwise, the current value of the specified resource is printed. If no option is given, then -f is assumed. Values are in 1024-byte increments, except for -t, which is in seconds, -p, which is in increments of 512 bytes, and -u, which is an unscaled number of processes.

- RLIMIT_AS

- The maximum size of the process's virtual memory (address space) in bytes. This limit affects calls to brk(2), mmap(2) and mremap(2), which fail with the error ENOMEM upon exceeding this limit. Also automatic stack expansion will fail (and generate a SIGSEGV that kills the process if no alternate stack has been made available via sigaltstack(2)). Since the value is a long, on machines with a 32-bit long either this limit is at most 2 GiB, or this resource is unlimited.

- RLIMIT_CORE

- Maximum size of core file. When 0, no core dump files are created. When non-zero, larger dumps are truncated to this size.

- RLIMIT_CPU

- CPU time limit in seconds. When the process reaches the soft limit, it is sent a SIGXCPU signal. The default action for this signal is to terminate the process. However, the signal can be caught, and the handler can return control to the main program. If the process continues to consume CPU time, it will be sent SIGXCPU once per second until the hard limit is reached, at which time it is sent SIGKILL. (This latter point describes Linux 2.2 through 2.6 behavior. Implementations vary in how they treat processes which continue to consume CPU time after reaching the soft limit. Portable applications that need to catch this signal should perform an orderly termination upon first receipt of SIGXCPU.)

- RLIMIT_DATA

- The maximum size of the process's data segment (initialized data, uninitialized data, and heap). This limit affects calls to brk(2) and sbrk(2), which fail with the error ENOMEM upon encountering the soft limit of this resource.

- RLIMIT_FSIZE

- The maximum size of files that the process may create. Attempts to extend a file beyond this limit result in delivery of a SIGXFSZ signal. By default, this signal terminates a process, but a process can catch this signal instead, in which case the relevant system call (e.g., write(2), truncate(2)) fails with the error EFBIG.

- RLIMIT_LOCKS

- (Early Linux 2.4 only) A limit on the combined number of flock(2) locks and fcntl(2) leases that this process may establish.

- RLIMIT_MEMLOCK

- The maximum number of bytes of memory that may be locked into RAM. In effect this limit is rounded down to the nearest multiple of the system page size. This limit affects mlock(2) and mlockall(2) and the mmap(2) MAP_LOCKED operation. Since Linux 2.6.9 it also affects the shmctl(2) SHM_LOCK operation, where it sets a maximum on the total bytes in shared memory segments (see shmget(2)) that may be locked by the real user ID of the calling process. The shmctl(2) SHM_LOCK locks are accounted for separately from the per-process memory locks established by mlock(2), mlockall(2), and mmap(2) MAP_LOCKED; a process can lock bytes up to this limit in each of these two categories. In Linux kernels before 2.6.9, this limit controlled the amount of memory that could be locked by a privileged process. Since Linux 2.6.9, no limits are placed on the amount of memory that a privileged process may lock, and this limit instead governs the amount of memory that an unprivileged process may lock.

- RLIMIT_MSGQUEUE

- (Since Linux 2.6.8) Specifies the limit on the number of bytes that can be allocated for POSIX message queues for the real user ID of the calling process. This limit is enforced for mq_open(3). Each message queue that the user creates counts (until it is removed) against this limit according to the formula:

bytes = attr.mq_maxmsg * sizeof(struct msg_msg *) + attr.mq_maxmsg * attr.mq_msgsize

where attr is the mq_attr structure specified as the fourth argument to mq_open(3). The first addend in the formula, which includes sizeof(struct msg_msg *) (4 bytes on Linux/i386), ensures that the user cannot create an unlimited number of zero-length messages (such messages nevertheless each consume some system memory for bookkeeping overhead).

- RLIMIT_NICE

- (Since Linux 2.6.12) Specifies a ceiling to which the process's priority can be raised using setpriority(2) or nice(2), i.e., how low its nice value may go. The actual ceiling for the nice value is calculated as 20 - rlim_cur. (This strangeness occurs because negative numbers cannot be specified as resource limit values, since they typically have special meanings. For example, RLIM_INFINITY typically is the same as -1.)

- RLIMIT_NOFILE

- Specifies a value one greater than the maximum file descriptor number that can be opened by this process. Attempts (open(2), pipe(2), dup(2), etc.) to exceed this limit yield the error EMFILE. (Historically, this limit was named RLIMIT_OFILE on BSD.)

- RLIMIT_NPROC

- The maximum number of processes (or, more precisely on Linux, threads) that can be created for the real user ID of the calling process. Upon encountering this limit, fork(2) fails with the error EAGAIN.

- RLIMIT_RSS

- Specifies the limit (in pages) of the process's resident set (the number of virtual pages resident in RAM). This limit only has effect in Linux 2.4.x, x < 30, and there only affects calls to madvise(2) specifying MADV_WILLNEED.

- RLIMIT_RTPRIO

- (Since Linux 2.6.12) Specifies a ceiling on the real-time priority that may be set for this process using sched_setscheduler(2) and sched_setparam(2).

- RLIMIT_RTTIME

- (Since Linux 2.6.25) Specifies a limit on the amount of CPU time that a process scheduled under a real-time scheduling policy may consume without making a blocking system call. For the purpose of this limit, each time a process makes a blocking system call, the count of its consumed CPU time is reset to zero. The CPU time count is not reset if the process continues trying to use the CPU but is preempted, its time slice expires, or it calls sched_yield(2). Upon reaching the soft limit, the process is sent a SIGXCPU signal. If the process catches or ignores this signal and continues consuming CPU time, then SIGXCPU will be generated once each second until the hard limit is reached, at which point the process is sent a SIGKILL signal. The intended use of this limit is to stop a runaway real-time process from locking up the system.

- RLIMIT_SIGPENDING

- (Since Linux 2.6.8) Specifies the limit on the number of signals that may be queued for the real user ID of the calling process. Both standard and real-time signals are counted for the purpose of checking this limit. However, the limit is only enforced for sigqueue(2); it is always possible to use kill(2) to queue one instance of any of the signals that are not already queued to the process.

- RLIMIT_STACK

- The maximum size of the process stack, in bytes. Upon reaching this limit, a SIGSEGV signal is generated. To handle this signal, a process must employ an alternate signal stack (sigaltstack(2)).
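The RLIMIT_NICE arithmetic is easy to get backwards, so here is a tiny bash sketch of it. lowest_nice_allowed is a hypothetical helper name; bash exposes RLIMIT_NICE through its ulimit -e option. With the common default soft limit of 0 the result is 20, meaning an unprivileged process cannot lower its niceness at all.

```shell
#!/usr/bin/env bash
# lowest_nice_allowed R: most favorable (lowest) nice value an unprivileged
# process may set when RLIMIT_NICE's soft limit is R: ceiling = 20 - rlim_cur.
lowest_nice_allowed() {
  echo $(( 20 - $1 ))
}

current=$(ulimit -e)    # bash: RLIMIT_NICE soft limit (a number or "unlimited")
echo "RLIMIT_NICE soft limit: $current"
if [ "$current" != unlimited ]; then
  echo "lowest nice you can set: $(lowest_nice_allowed "$current")"
fi
```

For example, a limit of 20 lets a user go back down to nice 0, and 40 allows the full range down to -20.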

ulimit Examples

Turn off core dumps

ulimit -S -c 0
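Because the shell's limits are inherited by the processes it creates, a subshell is a handy way to limit one job without touching your login shell. A minimal sketch, assuming bash and a hard open-files limit of at least 256 (child_limits is a hypothetical helper name): the limits are lowered inside a subshell and then read back from a child bash.

```shell
#!/usr/bin/env bash
# Limits changed inside a subshell apply to that subshell and to every
# process it starts, but not to the parent shell.
child_limits() (
  ulimit -S -c 0            # soft limit: no core dumps (as above)
  ulimit -S -n 256          # soft limit: at most 256 open file descriptors
  bash -c 'echo "core=$(ulimit -c) nofile=$(ulimit -n)"'
)

child_limits              # prints: core=0 nofile=256
echo "parent still has nofile=$(ulimit -n)"
```

The same pattern works at the top of a cron-driven backup script, since everything the script runs inherits its limits.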

More Reading

- Please see the SYSSTAT Utilities Home for more performance monitoring tools like sar, sadf, mpstat, iostat, pidstat and sa tools.

- Multitasking from the Linux Command Line + Process Prioritization

Man Pages

Kernel Documentation

- information on schedstats (Linux Scheduler Statistics)

- real-time group scheduling

- How and why the scheduler's nice levels are implemented

- information on scheduling domains

- goals, design and implementation of the Complete Fair Scheduler
